Intel AERO auto flight (position control mode)

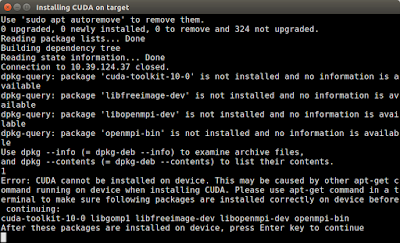

1. Build a stable visual odometry system (e.g. stereo visual odometry).
2. Send the local position to the flight controller [1], following the PX4 coordinate system.

   Figure: PX4 coordinate system [2]

3. Modify the RC mode and position control mode parameters.
   - RC mode:
     - 0: Stabilized mode
     - 1: Position mode
     - 2: Altitude mode
   - Figure: position control mode parameters
4. Activate the stereo visual odometry system (ssh to the TX2), and then switch to position control mode.
5. Take off, hold the drone manually, and then try taking your hands off the remote controller.

Experimental result:

Parameters for LPE (Local Position Estimator):
1. LPE_FUSION: 4
2. ATT_EXT_HDG_M: 1
3. LPE_VIS_XY: minimum value
4. LPE_VIS_Z: minimum value

Reference:
- [1] https://chuangrobot.blogspot.com/2018/12/communicate-with-intel-rtf-flight.html
- [2] https://dev.px4.io/en/ros/external_position_estimation.html
- [3] https://dev.px4.io/en/advanced/switching_state_estimators.html
- [4] https://dev.px4.io/en/ros/external_positi...
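Step 2 needs the visual odometry position expressed in the frame the flight controller expects. Below is a minimal sketch under some assumptions. The stereo VO is assumed to report positions in the camera optical frame (x right, y down, z forward, the ROS REP 103 optical convention). The camera is assumed to look straight along the drone's nose. The VO world frame is assumed to be handed on as a local ENU frame. The function names are hypothetical.

PX4 works internally in NED. If the pose goes through MAVROS (for example its vision_pose plugin), MAVROS performs the ENU to NED step itself. In that case only the first function would be needed.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def optical_to_flu(p_cam: Vec3) -> Vec3:
    """Camera optical frame (x right, y down, z forward) -> x forward, y left, z up.

    Assumption: a forward-looking camera whose optical axis points along the
    drone's nose; a real setup would also apply the camera extrinsics.
    """
    x_right, y_down, z_forward = p_cam
    return (z_forward, -x_right, -y_down)


def enu_to_ned(p_enu: Vec3) -> Vec3:
    """Local ENU (x east, y north, z up) -> local NED (x north, y east, z down).

    Swapping x/y and negating z is its own inverse, so the same function
    also converts NED back to ENU.
    """
    x_east, y_north, z_up = p_enu
    return (y_north, x_east, -z_up)


if __name__ == "__main__":
    # A point 0.5 m to the right, 0.2 m above and 2.0 m in front of the camera.
    p_cam = (0.5, -0.2, 2.0)
    # Assumption: the VO world frame is passed on as if it were local ENU.
    p_enu = optical_to_flu(p_cam)
    p_ned = enu_to_ned(p_enu)
    print("ENU:", p_enu)  # (2.0, -0.5, 0.2)
    print("NED:", p_ned)  # (-0.5, 2.0, -0.2)
```

Applying the wrong convention here typically shows up as the drone drifting or flipping its response on one axis as soon as position mode is engaged. It is worth printing a few converted positions while moving the drone by hand before the first flight.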
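LPE_FUSION is a bitmask, so the value 4 used above selects a combination of sources rather than a single mode. The small decoder below shows what a given value enables. The bit names are taken from the PX4 parameter reference for the LPE estimator (v1.8-era firmware). This is an assumption to check against the parameter list of the firmware you actually run, since the list has changed between versions. The helper functions themselves are hypothetical.

```python
from typing import Dict, List

# Bit assignments of PX4's LPE_FUSION bitmask (v1.8-era parameter reference).
# Assumption: verify against the parameter list of your own firmware.
LPE_FUSION_BITS: Dict[int, str] = {
    0: "fuse GPS",
    1: "fuse optical flow",
    2: "fuse vision position",
    3: "fuse vision yaw",
    4: "fuse land detector",
    5: "pub agl as lpos down",
    6: "flow gyro compensation",
    7: "fuse baro",
}


def decode_lpe_fusion(value: int) -> List[str]:
    """Return the names of the sources enabled in an LPE_FUSION value."""
    return [name for bit, name in sorted(LPE_FUSION_BITS.items()) if value & (1 << bit)]


def encode_lpe_fusion(names: List[str]) -> int:
    """Build an LPE_FUSION value from a list of source names."""
    bit_of = {name: bit for bit, name in LPE_FUSION_BITS.items()}
    value = 0
    for name in names:
        value |= 1 << bit_of[name]
    return value


if __name__ == "__main__":
    print(4, decode_lpe_fusion(4))      # the value used in this post
    print(145, decode_lpe_fusion(145))  # GPS + land detector + baro, for comparison
```

Read this way, LPE_FUSION = 4 fuses only the vision position and leaves GPS and the barometer out. This matches flying on stereo visual odometry alone. The heading is then taken from vision through ATT_EXT_HDG_M = 1 (if the parameter reference is read correctly, 1 selects vision as the external heading source).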