Tips for using imu_utils for IMU calibration

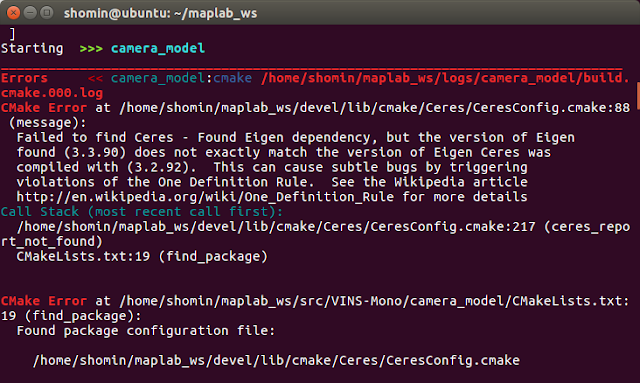

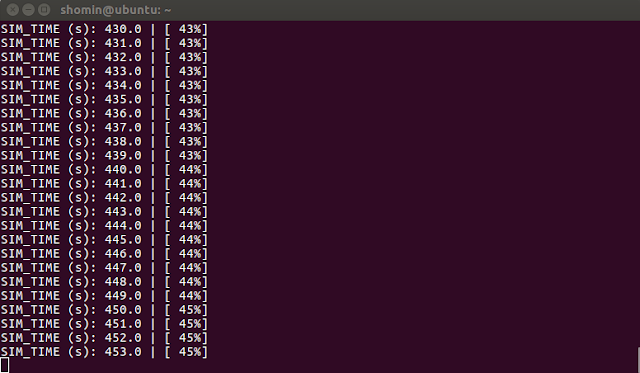

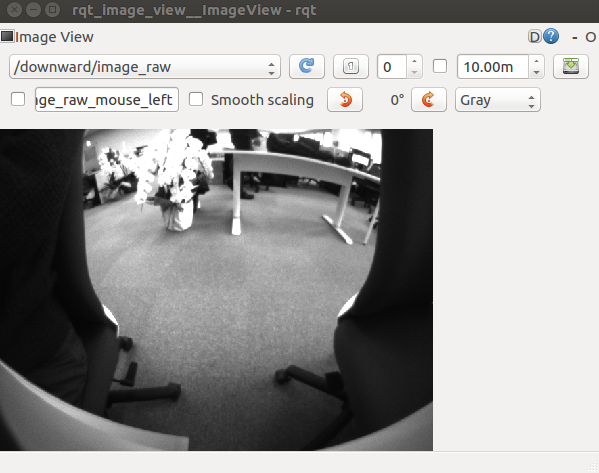

1. Install Ceres
   * Dependencies:
     * `sudo apt-get install liblapack-dev libsuitesparse-dev libcxsparse3.1.4 libgflags-dev`
     * `sudo apt-get install libgoogle-glog-dev libgtest-dev`
   * The Eigen version should be newer than 3.3.4.
   * Download the release tarball `ceres-solver-1.14.0.tar.gz` directly.
2. Build code_utils
   * Dependency: `sudo apt-get install libdw-dev`
   * Build the source code in the ROS workspace (`ROS_WORKSPACE`).
   * In `catkin_ws/src/code_utils/src/sumpixel_test.cpp`, change `#include "backward.hpp"` to `#include "code_utils/backward.hpp"`.
3. Build imu_utils
   * Build the source code in the ROS workspace (`ROS_WORKSPACE`).
4. Run the calibration
   * Create your own `.launch` file (assign the topic name, time duration, ...).
   * Run `roslaunch imu_utils XXX.launch` first, then `rosbag play -r 200 XXX.bag`.
   * **Don't forget to source the `setup.bash` file!**

References:

* [1] https://github.com/gaowenliang/imu_utils
* [2] https://zhuanlan.zhihu.com/p/151675712
* [3] https://blog.csdn.net...
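The `#include` fix in step 2 can also be applied from the command line instead of editing the file by hand. This is a minimal sketch, assuming GNU `sed` (the default on Ubuntu). `patch_backward_include` is a hypothetical helper name, not part of code_utils.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper (not part of code_utils): rewrite the backward.hpp
# include so it points at the header inside the code_utils package.
patch_backward_include() {
  local file="$1"
  # Only the exact line '#include "backward.hpp"' is rewritten; all other
  # lines are left untouched. GNU sed's -i edits the file in place.
  sed -i 's|^#include "backward\.hpp"|#include "code_utils/backward.hpp"|' "$file"
}

# Example, using the path from step 2 (adjust to your workspace):
# patch_backward_include catkin_ws/src/code_utils/src/sumpixel_test.cpp
```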
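For step 4, the launch file can be generated with a small shell helper. This is a sketch under stated assumptions: `write_imu_launch` is a hypothetical helper, and the node type `imu_an` and the parameter names (`imu_topic`, `imu_name`, `data_save_path`, `max_time_min`, `max_cluster`) are assumed from the example launch files in the imu_utils repository [1], so check them against your own checkout before relying on them.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write an imu_utils launch file.
# ASSUMPTION: node type and parameter names follow the example launch files
# in the imu_utils repository; verify them against your checkout.
# Usage: write_imu_launch OUT_FILE IMU_TOPIC IMU_NAME MAX_TIME_MINUTES
write_imu_launch() {
  local out="$1" topic="$2" name="$3" minutes="$4"
  # Unquoted heredoc so ${topic} etc. expand; \$ keeps the roslaunch
  # $(find imu_utils) substitution literal instead of running it in the shell.
  cat > "$out" <<EOF
<launch>
  <node pkg="imu_utils" type="imu_an" name="imu_an" output="screen">
    <!-- IMU topic recorded in the bag -->
    <param name="imu_topic" type="string" value="${topic}"/>
    <!-- Label used for the result files -->
    <param name="imu_name" type="string" value="${name}"/>
    <param name="data_save_path" type="string" value="\$(find imu_utils)/data/"/>
    <!-- Duration of data to analyse, in minutes -->
    <param name="max_time_min" type="int" value="${minutes}"/>
    <param name="max_cluster" type="int" value="100"/>
  </node>
</launch>
EOF
}
```

For example, `write_imu_launch catkin_ws/src/imu_utils/launch/my_imu.launch /imu/data my_imu 120` would create a launch file that can then be started with `roslaunch imu_utils my_imu.launch` (the topic, name, and duration here are placeholders for your own setup).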